A health check endpoint reports how our application is doing. In this post, we will show how to add health checks to your NestJS application. If you want to learn about enabling CORS in your NestJS application, you can read more about it here.

Why add Health Checks?

Once you build and deploy your application, you need an easy way to know whether it is running smoothly without calling its business logic. Health checks let you verify that the database is reachable, the storage disk has room, and the application service is executing as intended.

The most important reason to add health checks is so you can continuously monitor your application. A metrics collection service (like Micrometer) can keep validating the application and verify that there are no software or hardware failures. The moment a failure occurs, it can trigger a notification for manual or automatic intervention to get the application back on track. This improves the reliability of the application.

Health checks in NestJS Application

In the NestJS framework, the Terminus library offers a way to integrate readiness/liveness health checks. A service or infrastructure component continuously hits a GET endpoint and takes action based on the response.
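To make the polling idea concrete, here is a minimal sketch of how a monitoring component might interpret a health response. The names `decideAction`, `ProbeAction`, and `HealthBody`, as well as the "three failures" rule, are hypothetical and not part of Terminus. The sketch only assumes that the endpoint answers with HTTP 200 and `status: "ok"` when healthy and with a non-200 code (503 by default in Terminus) otherwise.

```typescript
// Hypothetical monitor-side helper; none of these names come from Terminus.
type ProbeAction = 'none' | 'alert' | 'restart';

interface HealthBody {
  status: 'ok' | 'error' | 'shutting_down';
}

// Map one probe result, plus the number of failures seen in a row
// (including this one), to an action for the monitor to take.
function decideAction(
  httpStatus: number,
  body: HealthBody | undefined,
  consecutiveFailures: number,
): ProbeAction {
  const healthy = httpStatus === 200 && body?.status === 'ok';
  if (healthy) {
    return 'none';
  }
  // Assumed policy: alert on the first failures, restart after three in a row.
  return consecutiveFailures >= 3 ? 'restart' : 'alert';
}
```

A real orchestrator such as Kubernetes applies its own failure thresholds, so treat the policy here as an illustration only.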

Let’s get started. We will add the terminus library to our NestJS application.

npm install @nestjs/terminus

Terminus integration offers graceful shutdown as well as Kubernetes readiness/liveness checks for HTTP applications. A liveness check tells whether the container is up and running. A readiness check tells whether the container is ready to accept incoming requests.
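The difference between the two probes is easy to blur, so here is a small, framework-free sketch of it. The `ProbeState` class and its method names are hypothetical and only illustrate the idea: liveness answers as long as the process runs, while readiness depends on whether startup work (such as connecting to the database) has finished.

```typescript
// Hypothetical probe state; the class and method names are illustrative only.
class ProbeState {
  private dependenciesReady = false;

  // Call once startup work (DB connection, cache warm-up) has finished.
  markReady(): void {
    this.dependenciesReady = true;
  }

  // Call when a dependency is lost or a shutdown begins.
  markNotReady(): void {
    this.dependenciesReady = false;
  }

  // Liveness: the process is running and able to answer at all.
  liveness(): 'up' | 'down' {
    return 'up';
  }

  // Readiness: "up" only while the dependencies are available.
  readiness(): 'up' | 'down' {
    return this.dependenciesReady ? 'up' : 'down';
  }
}
```

With this split, an orchestrator can stop routing traffic to a container that is alive but not ready, without restarting it.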

We will also set up checks for the database, memory, and disk in this post to show how health checks work. A Redis check can be added in the same way.

How to set up a Health check in NestJS?

Once we have added the @nestjs/terminus package, we can create a health check endpoint and include some predefined indicators. These indicators include an HTTP check, a database connectivity check, and memory and disk checks.

Depending on which ORM you are using, NestJS offers built-in health check indicators, such as those for TypeORM or Sequelize.

The health check combines the results of these indicators. Together, they tell us how our application is doing.

DiskHealthIndicator

Let’s start with how the server’s hard disk is doing.

DiskHealthIndicator contains the check for disk storage of the current machine.

Once we add DiskHealthIndicator in our health controller, we will check for storage as follows:

this.disk.checkStorage('diskStorage', { thresholdPercent: 0.5, path: 'C:\\'});
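To see what a percentage threshold means in practice, here is a minimal sketch of the comparison. It assumes that the check looks at the fraction of used space, so a `thresholdPercent` of 0.5 fails once more than half of the disk is used. The `DiskUsage` type and the `isDiskHealthy` function are hypothetical names, not the Terminus implementation.

```typescript
// Hypothetical helper mirroring the idea behind `thresholdPercent`.
interface DiskUsage {
  totalBytes: number;
  freeBytes: number;
}

// Returns true while the used fraction of the disk stays at or below the threshold.
function isDiskHealthy(usage: DiskUsage, thresholdPercent: number): boolean {
  if (usage.totalBytes <= 0) {
    throw new RangeError('totalBytes must be positive');
  }
  const usedRatio = (usage.totalBytes - usage.freeBytes) / usage.totalBytes;
  return usedRatio <= thresholdPercent;
}
```

For example, a disk with 100 GB in total and 60 GB free is 40% used, so it passes a 0.5 threshold.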

HttpHealthIndicator

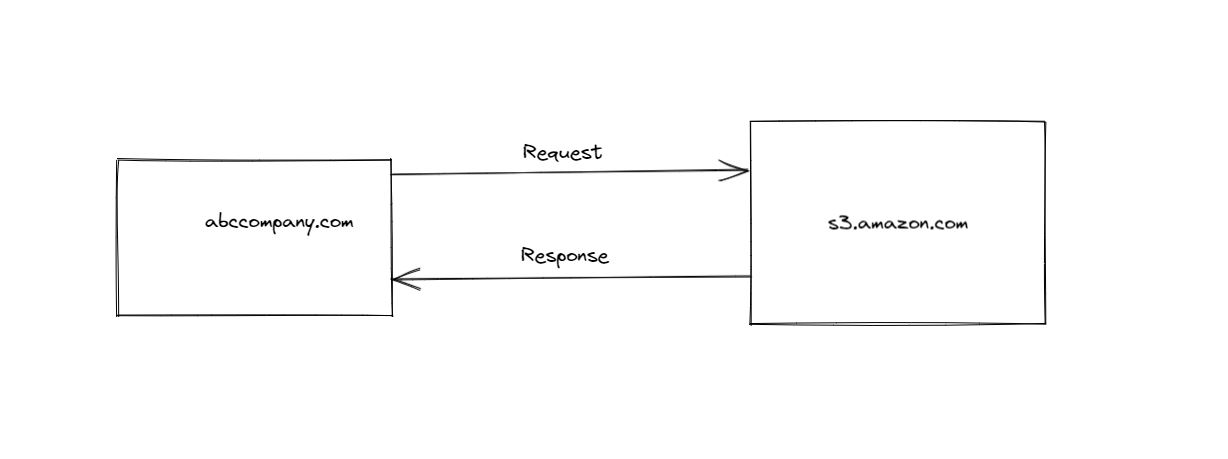

HttpHealthIndicator reports whether our HTTP application is up and running. To use it, we need to add the @nestjs/axios package to our project.

npm install @nestjs/axios

Additionally, we will use the pingCheck method to verify that we can connect to the application.

this.http.pingCheck('Basic check', 'http://localhost:3000');

MemoryHealthIndicator

MemoryHealthIndicator reports on the memory used by the application process. The two checks below verify that the heap and the resident set size (RSS) each stay under 300 MB.

this.memory.checkHeap('memory_heap', 300*1024*1024);

this.memory.checkRSS('memory_rss',300*1024*1024);
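The two thresholds can be read as simple comparisons against the current memory figures. The sketch below is a simplified model with hypothetical names (`MemorySnapshot`, `CheckStatus`, `checkMemory`). It assumes a check is "up" while the measured value stays at or below the limit, and it reports a failed check as "down" in the same object rather than raising an error as a real indicator would.

```typescript
// Hypothetical shapes; only the field names heapUsed and rss match Node.js.
interface MemorySnapshot {
  heapUsed: number; // bytes of heap currently in use
  rss: number; // resident set size in bytes
}

type CheckStatus = 'up' | 'down';

const MB = 1024 * 1024;

// Compare a memory snapshot against heap and RSS limits given in bytes.
function checkMemory(
  snapshot: MemorySnapshot,
  heapLimitBytes: number,
  rssLimitBytes: number,
): { memory_heap: { status: CheckStatus }; memory_rss: { status: CheckStatus } } {
  return {
    memory_heap: { status: snapshot.heapUsed <= heapLimitBytes ? 'up' : 'down' },
    memory_rss: { status: snapshot.rss <= rssLimitBytes ? 'up' : 'down' },
  };
}
```

In a Node.js process, the object returned by `process.memoryUsage()` contains both fields, so it could be passed in as the snapshot, for example `checkMemory(process.memoryUsage(), 300 * MB, 300 * MB)`.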

Database Health Check

Assuming your application uses a database, you will need a database health check. @nestjs/terminus provides database health checks through ORM packages like TypeORM, Sequelize, or Mongoose. As part of this demo, we will create a custom database health check since we use Prisma ORM.

NestJS Application

Let's create a NestJS application with the Nest CLI.

nest new healthcheckdemo

As previously stated, we will use Prisma ORM.

npm install prisma --save-dev

This installs the Prisma CLI. If we now run npx prisma init, it creates a bare-bones schema.prisma file where we will define our database model schema.

In this application, I am using a simple schema where a user can sign up to create posts. I am also using the MySQL database. This schema will look like the below:

// This is your Prisma schema file,
// learn more about it in the docs: https://pris.ly/d/prisma-schema

generator client {
  provider   = "prisma-client-js"
  engineType = "binary"
}

datasource db {
  provider = "mysql"
  url      = env("DATABASE_URL")
}

model User {
  id    Int     @default(autoincrement()) @id
  email String  @unique
  name  String?
  posts Post[]
}

model Post {
  id        Int      @default(autoincrement()) @id
  title     String
  content   String?
  published Boolean? @default(false)
  author    User?    @relation(fields: [authorId], references: [id])
  authorId  Int?
}

By default, Prisma will create a .env file if one does not exist already. It will also add a default variable for DATABASE_URL.

If we run npx prisma migrate dev, it will create those database tables in our DB.

Further, let’s create an app module in our sample application for healthcheckdemo.

import { Module } from '@nestjs/common';
import { PrismaClient } from '@prisma/client';
import { AppController } from './app.controller';
import { AppService } from './app.service';
import { UserService } from './user.service';
import { HealthModule } from './health/health.module';
import { HttpModule } from '@nestjs/axios';
import { PrismaService } from './prisma.service';

@Module({
  imports: [HealthModule, HttpModule],
  controllers: [AppController],
  providers: [AppService, UserService, PrismaClient, PrismaService],
})
export class AppModule {}

We will also create a HealthModule that hosts the HealthController.

import { Module } from '@nestjs/common';
import { TerminusModule } from '@nestjs/terminus';
import { PrismaService } from 'src/prisma.service';
import { HealthController } from './health.controller';
import { PrismaOrmHealthIndicator } from './prismaorm.health';

@Module({
  imports: [TerminusModule],
  controllers: [HealthController],
  providers: [PrismaOrmHealthIndicator, PrismaService],
})
export class HealthModule {}

In this HealthModule, you will notice PrismaOrmHealthIndicator. Before we dive into it, we need to generate the Prisma Client.

Running npm install @prisma/client installs the package and generates the Prisma Client for your database model. This exposes CRUD operations for your database model, making it easier for developers to focus on business logic rather than on how to access data from a database.

We will abstract away Prisma Client APIs to create database queries in a separate service PrismaService. This service will also instantiate Prisma Client.

import { INestApplication, Injectable, OnModuleInit } from '@nestjs/common';
import { PrismaClient } from '@prisma/client';

@Injectable()
export class PrismaService extends PrismaClient implements OnModuleInit {
  // Connect to the database as soon as the module is initialized.
  async onModuleInit() {
    await this.$connect();
  }

  // Close the Nest application when Prisma emits its beforeExit event.
  async enableShutdownHooks(app: INestApplication) {
    this.$on('beforeExit', async () => {
      await app.close();
    });
  }
}

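There is a documented issue with enableShutdownHooks: Prisma listens for shutdown signals and can exit the process before the NestJS shutdown hooks fire. The enableShutdownHooks method above handles this by closing the application when Prisma emits its beforeExit event.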

Health Controller

For a health check, we need a health controller. We talked about the health module in the previous section. There are two important pieces left before we show how the health check will look.

Let’s create a health controller.

nest g controller health

This will generate a controller for us.

import { Controller, Get, Inject } from '@nestjs/common';
import {
  DiskHealthIndicator,
  HealthCheck,
  HealthCheckService,
  HttpHealthIndicator,
  MemoryHealthIndicator,
  MicroserviceHealthIndicator,
} from '@nestjs/terminus';
import { PrismaOrmHealthIndicator } from './prismaorm.health';

@Controller('health')
export class HealthController {
  constructor(
    private health: HealthCheckService,
    private http: HttpHealthIndicator,
    @Inject(PrismaOrmHealthIndicator)
    private db: PrismaOrmHealthIndicator,
    private disk: DiskHealthIndicator,
    private memory: MemoryHealthIndicator,
  ) {}

  @Get()
  @HealthCheck()
  check() {
    return this.health.check([
      () => this.http.pingCheck('basic check', 'http://localhost:3000'),
      () => this.disk.checkStorage('diskStorage', { thresholdPercent: 0.5, path: 'C:\\' }),
      () => this.db.pingCheck('healthcheckdemo'),
      () => this.memory.checkHeap('memory_heap', 300 * 1024 * 1024),
      () => this.memory.checkRSS('memory_rss', 300 * 1024 * 1024),
      // Mongoose for MongoDB check
      // Redis check
    ]);
  }
}

In the health controller, we have a GET endpoint /health that reports how our application, the memory, the storage disk, and the database are doing. NestJS does not offer an ORM health indicator for Prisma, so I am writing a custom indicator to find out the database health.

This custom Prisma health indicator looks like this:

import { Injectable, InternalServerErrorException } from "@nestjs/common";
import { HealthIndicator, HealthIndicatorResult } from "@nestjs/terminus";
import { PrismaService } from "src/prisma.service";

@Injectable()
export class PrismaOrmHealthIndicator extends HealthIndicator {
  constructor(private readonly prismaService: PrismaService) {
    super();
  }

  // databaseName is used as the key of this check in the health response.
  async pingCheck(databaseName: string): Promise<HealthIndicatorResult> {
    try {
      // A trivial query that succeeds only if the database is reachable.
      await this.prismaService.$queryRaw`SELECT 1`;
      return this.getStatus(databaseName, true);
    } catch (e) {
      throw new InternalServerErrorException('Prisma check failed', e);
    }
  }
}

We are extending the abstract class HealthIndicator and implementing a method called pingCheck in this PrismaOrmHealthIndicator class. This method uses PrismaService to run a SELECT 1 query against the connected database. The databaseName argument is only used as the key of the check in the response. If the query is successful, the database status is reported as up.

Also, note that this class PrismaOrmHealthIndicator is injectable and we are injecting that in our HealthController.

Now if we start the application and execute the endpoint, we will get the response as below:

{
  "status": "ok",
  "info": {
    "basic check": {
      "status": "up"
    },
    "diskStorage": {
      "status": "up"
    },
    "healthcheckdemo": {
      "status": "up"
    },
    "memory_heap": {
      "status": "up"
    },
    "memory_rss": {
      "status": "up"
    }
  },
  "error": {},
  "details": {
    "basic check": {
      "status": "up"
    },
    "diskStorage": {
      "status": "up"
    },
    "healthcheckdemo": {
      "status": "up"
    },
    "memory_heap": {
      "status": "up"
    },
    "memory_rss": {
      "status": "up"
    }
  }
}

As you can see, everything seems to be running fine. healthcheckdemo is the database name that I am using in MySQL.
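The response has four parts: an overall status, the checks that passed (info), the checks that failed (error), and all checks together (details). The following sketch shows one way such a report could be assembled from individual indicator results. The `buildReport` function and its types are hypothetical and only model the shape of the response above, not how Terminus builds it internally.

```typescript
// Hypothetical types modelling the response shape shown above.
type IndicatorStatus = 'up' | 'down';

interface IndicatorDetail {
  status: IndicatorStatus;
  message?: string;
}

type IndicatorResult = Record<string, IndicatorDetail>;

interface HealthReport {
  status: 'ok' | 'error';
  info: IndicatorResult;
  error: IndicatorResult;
  details: IndicatorResult;
}

// Sort each indicator result into info (up) or error (down), then merge both into details.
function buildReport(results: IndicatorResult[]): HealthReport {
  const info: IndicatorResult = {};
  const error: IndicatorResult = {};
  for (const result of results) {
    for (const key of Object.keys(result)) {
      const detail = result[key];
      if (detail.status === 'up') {
        info[key] = detail;
      } else {
        error[key] = detail;
      }
    }
  }
  return {
    // A single failing indicator is enough to mark the whole report as failed.
    status: Object.keys(error).length === 0 ? 'ok' : 'error',
    info,
    error,
    details: { ...info, ...error },
  };
}
```

If the database check were down while the disk check was up, the report would carry status "error", list healthcheckdemo under error, and still include both checks under details.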

Similarly, we can also add Redis and Mongoose checks as part of the health checks in our NestJS application.

Conclusion

In this post, we created a simple NestJS application to demonstrate how to add health checks. The code for this post is available here.

If you have any feedback on this post or my book Simplifying Spring Security, I would love to hear it.