In this post, I will show how to implement basic authentication using Passport.js in a NestJS application. So far in the NestJS series, we have covered:

- How to use CORS in a NestJS Application

- Adding Health Checks in a NestJS Application

- Using Bull Queues in a NestJS application

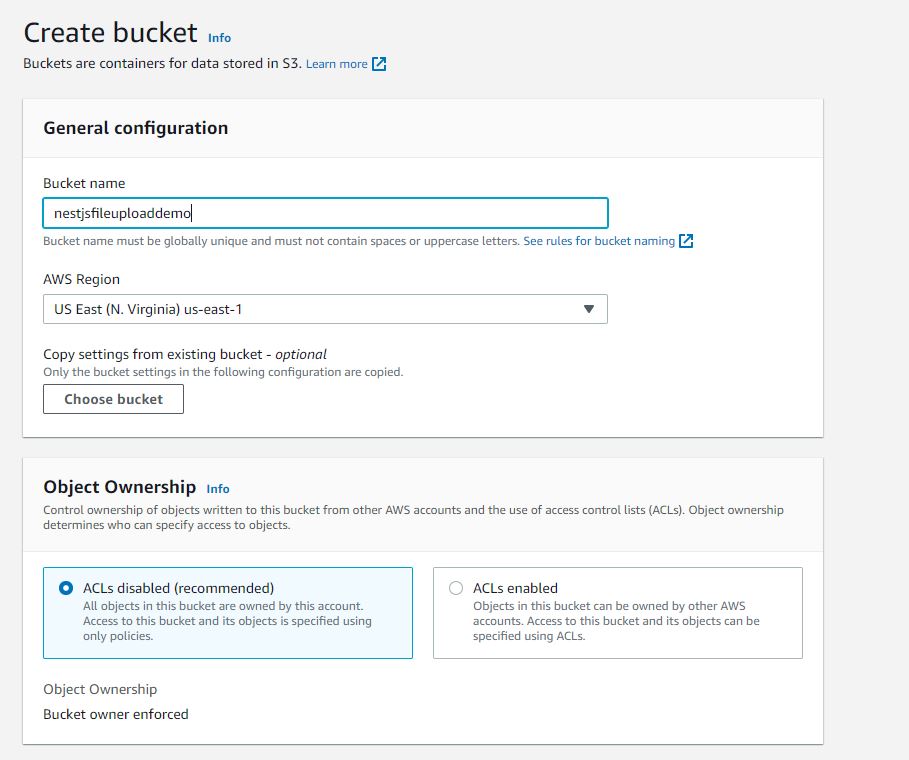

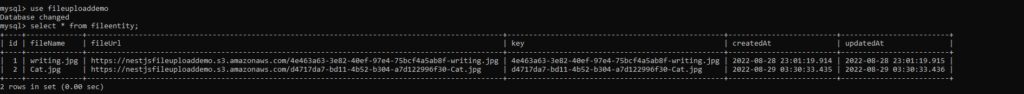

- Upload file to S3 using NestJS Application

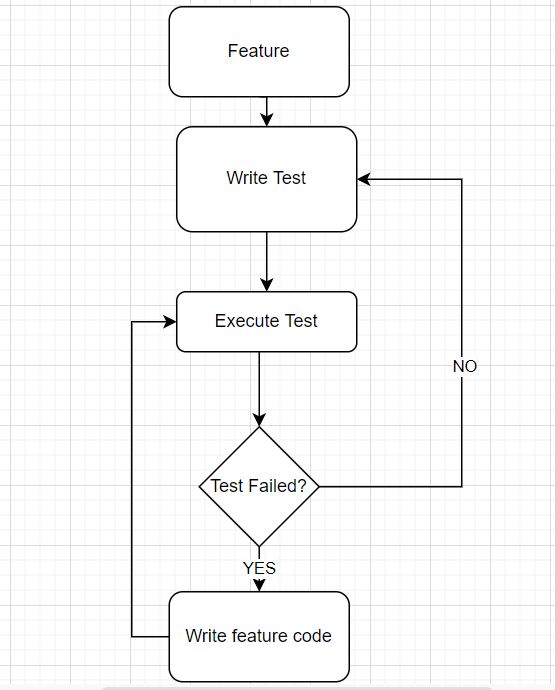

Basic Authentication

Basic authentication, though not secure for production applications, has been an authentication strategy for a long time.

Usually, a user accesses the application and enters a username and password on the login screen. The backend server will verify the username and password to authenticate the user.

There are a few security concerns with basic authentication:

- The password is entered as plain text. Security then depends on how the backend verifies and stores passwords. The recommended way is to store a hash of the password when the account is created. Hashing is a one-way mechanism, so we never know the user's password, and if the database is breached, plain-text passwords are not exposed.

- If we don't use a reCAPTCHA mechanism, it is easy for attackers to hit the login endpoint with automated requests, for example brute-force attempts or a DDoS attack.
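To make the one-way hashing idea concrete, here is a minimal sketch. The backend later in this post uses bcryptjs; this example swaps in scrypt from Node's built-in crypto module only so that it runs without extra dependencies. The function names hashPassword and verifyPassword and the salt:hash storage format are assumptions for illustration.

```typescript
import { randomBytes, scryptSync, timingSafeEqual } from "node:crypto";

const KEY_LENGTH = 64; // bytes of derived key (assumed value for this sketch)

// Hash a password with a fresh random salt; returns "salt:hash" in hex.
function hashPassword(password: string): string {
  const salt = randomBytes(16);
  const hash = scryptSync(password, salt, KEY_LENGTH);
  return `${salt.toString("hex")}:${hash.toString("hex")}`;
}

// Re-derive the hash from the candidate password and compare in constant time.
function verifyPassword(password: string, stored: string): boolean {
  const [saltHex, hashHex] = stored.split(":");
  if (!saltHex || !hashHex) return false;
  const expected = Buffer.from(hashHex, "hex");
  if (expected.length !== KEY_LENGTH) return false; // malformed stored value
  const actual = scryptSync(password, Buffer.from(saltHex, "hex"), KEY_LENGTH);
  return timingSafeEqual(actual, expected);
}
```

Only the salt and the derived hash are stored, so the original password cannot be read back from the database. Because the salt is random, hashing the same password twice gives two different stored values.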

Passport Library

We will use the Passport library as part of this demo. Passport is authentication middleware for Node.js applications. The NestJS documentation also recommends using the Passport library.

As part of using the Passport library, you will implement an authentication strategy (local for basic authentication or saml for SAML SSO).

In this implementation, the strategy defines a validate method that checks user credentials.

Let’s create a project for this demo and we will create two separate directories for frontend (ui) and backend.

Frontend application with React

In our ui directory, we will use React to build the UI. If you are using react-scripts (Create React App), start with:

    npx create-react-app ui

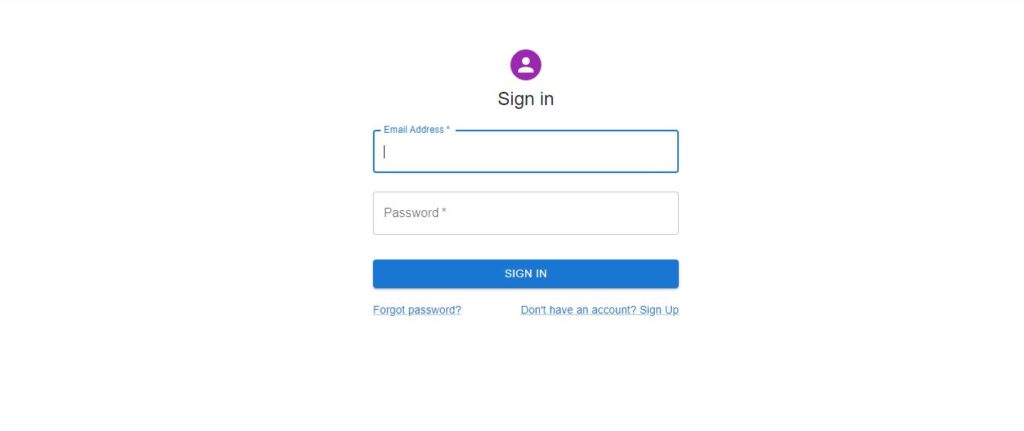

Create the login page

Once the React app is created, we have the bare bones of the app and can make sure it is running. Now, we will add a login page where the user will enter credentials.

We will need two libraries in the login page, Signin.js:

- axios to call the backend API

- useNavigate (from react-router-dom) to navigate to different pages

handleSubmit is a function that we will call when a user submits the form on the login screen.

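    const handleSubmit = async (event) => {
      event.preventDefault();
      const formData = new FormData(event.currentTarget);
      const form = {
        email: formData.get('email'),
        password: formData.get('password')
      };
      try {
        // axios rejects the promise for non-2xx responses, so a failed
        // sign-in (for example 400 or 401) is handled in the catch block.
        await axios.post("http://localhost:3000/api/v1/user/signin", form);
        // localStorage only stores strings, so write the string explicitly.
        localStorage.setItem('authenticated', 'true');
        setIsLoggedIn(true);
        navigate('/home');
      } catch (error) {
        // NestJS error responses carry the text in the `message` field.
        setErrorMessage(error.response?.data?.message ?? 'Sign-in failed');
      }
    };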

Once the user submits the form, handleSubmit collects submitted data and calls backend API with that form data.

User sign up

The sign-in page is of little help if there is no way for users to sign up. Of course, it all depends on your user flow.

On the user sign-up page, we will ask for firstName, lastName, email and password. Similar to the sign-in page, we will have a handleSubmit function that submits the sign-up form by calling the backend sign-up API.

    let navigate = useNavigate();

    const handleSubmit = async (event) => {
      event.preventDefault();
      const data = new FormData(event.currentTarget);
      console.log(data);
      const form = {
        firstName: data.get('fname'),
        lastName: data.get('lname'),
        email: data.get('email'),
        password: data.get('password')
      };
      await axios.post("http://localhost:3000/api/v1/user/signup", form);
      navigate('/');
    };

We will call this handleSubmit function from the form's onSubmit event:

    <Box component="form" noValidate onSubmit={handleSubmit} sx={{ mt: 3 }}>

As far as the Home page is concerned, we have a simple home page that shows Welcome to Dashboard. The user will navigate to the home page if authenticated successfully.
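The sign-in handler stores an authenticated flag in localStorage, and the home page can read that flag to decide whether to show the dashboard. Below is a small sketch of helpers around the flag. The helper names and the KeyValueStore interface are assumptions for illustration; the interface is a subset of the Web Storage API so the same functions work with window.localStorage or an in-memory stand-in.

```typescript
// Subset of the Web Storage API used by the helpers below.
interface KeyValueStore {
  getItem(key: string): string | null;
  setItem(key: string, value: string): void;
  removeItem(key: string): void;
}

const AUTH_KEY = "authenticated";

function markAuthenticated(store: KeyValueStore): void {
  // Storage only holds strings, so write the string "true" explicitly.
  store.setItem(AUTH_KEY, "true");
}

function isAuthenticated(store: KeyValueStore): boolean {
  return store.getItem(AUTH_KEY) === "true";
}

function signOut(store: KeyValueStore): void {
  store.removeItem(AUTH_KEY);
}

// In-memory stand-in for localStorage, handy outside the browser.
function createMemoryStore(): KeyValueStore {
  const items = new Map<string, string>();
  return {
    getItem: (key) => items.get(key) ?? null,
    setItem: (key, value) => {
      items.set(key, value);
    },
    removeItem: (key) => {
      items.delete(key);
    },
  };
}
```

In the browser, the home page could call isAuthenticated(window.localStorage) and navigate back to the login page when it returns false. Keep in mind that this flag lives only on the client, so it improves the user flow but does not protect backend APIs.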

Backend application with NestJS

Let’s look at the backend side of this application. I will not show the basics of creating a NestJS app and setting up Prisma as the ORM. You can follow those details here.

We will create a user table as part of the Prisma setup.

    // This is your Prisma schema file,
    // learn more about it in the docs: https://pris.ly/d/prisma-schema

    generator client {
      provider = "prisma-client-js"
    }

    datasource db {
      provider = "mysql"
      url      = env("DATABASE_URL")
    }

    model User {
      id         String   @id @default(uuid())
      email      String   @unique
      first_name String
      last_name  String?
      password   String
      createdAt  DateTime @default(now())
      updatedAt  DateTime @updatedAt
    }

When a user signs up for our application, we will store that information in the User table.

Controller for backend APIs

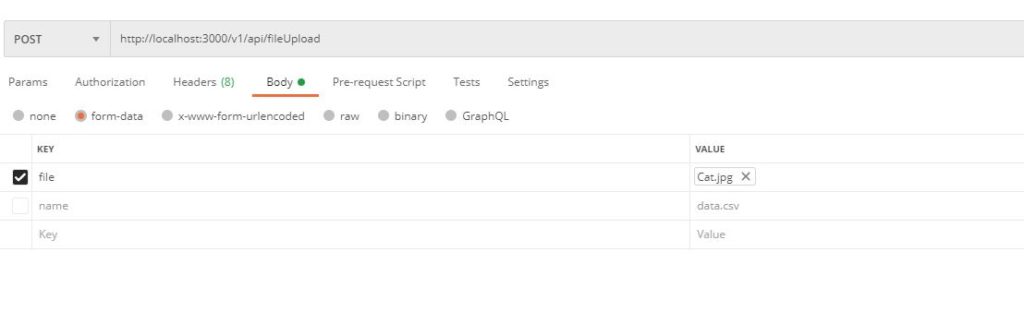

We will need two APIs: one for sign-up and one for sign-in. The frontend section already showed the sign-up page. When the user submits that page, the frontend calls the sign-up API on the backend.

    @Post('/signup')
    async create(@Res() response, @Body() createUserDto: CreateUserDto) {
      const user = await this.usersService.createUser(createUserDto);
      return response.status(HttpStatus.CREATED).json({
        user
      });
    }

The frontend will pass an object for CreateUserDto and our UsersService will use that DTO to create users with the help of a repository.
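The post does not show CreateUserDto itself, so here is a hedged sketch of what its shape might look like, based on the fields the sign-up form sends. The validateCreateUser function and its rules (for example the 8-character minimum) are assumptions for illustration. NestJS projects commonly express such rules with class-validator decorators and a ValidationPipe instead; the plain function below just keeps the example dependency-free.

```typescript
// Assumed shape of the sign-up payload; lastName is optional,
// matching the nullable last_name column in the Prisma model.
interface CreateUserDto {
  firstName: string;
  lastName?: string;
  email: string;
  password: string;
}

// Returns a list of human-readable problems; an empty list means valid.
function validateCreateUser(input: Partial<CreateUserDto>): string[] {
  const errors: string[] = [];
  if (!input.firstName || input.firstName.trim() === "") {
    errors.push("firstName is required");
  }
  // Deliberately loose email check: something@something.something
  if (!input.email || !/^[^\s@]+@[^\s@]+\.[^\s@]+$/.test(input.email)) {
    errors.push("email must be a valid address");
  }
  // Minimum length is an assumed rule for this sketch.
  if (!input.password || input.password.length < 8) {
    errors.push("password must be at least 8 characters");
  }
  return errors;
}
```

Validating the payload before it reaches the service keeps malformed sign-ups from ever reaching the hashing and database steps.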

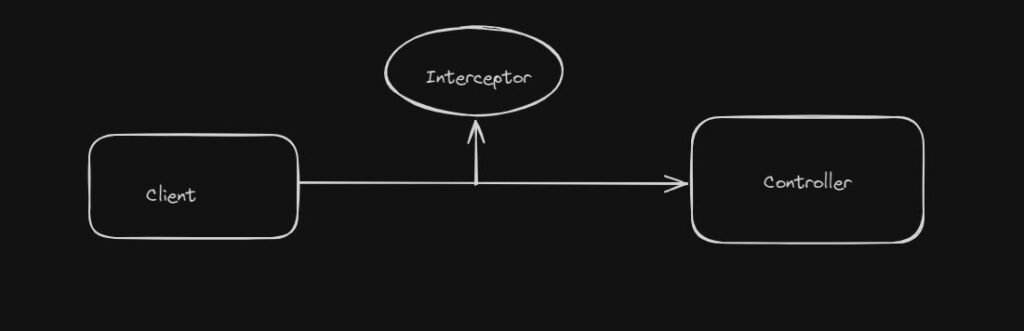

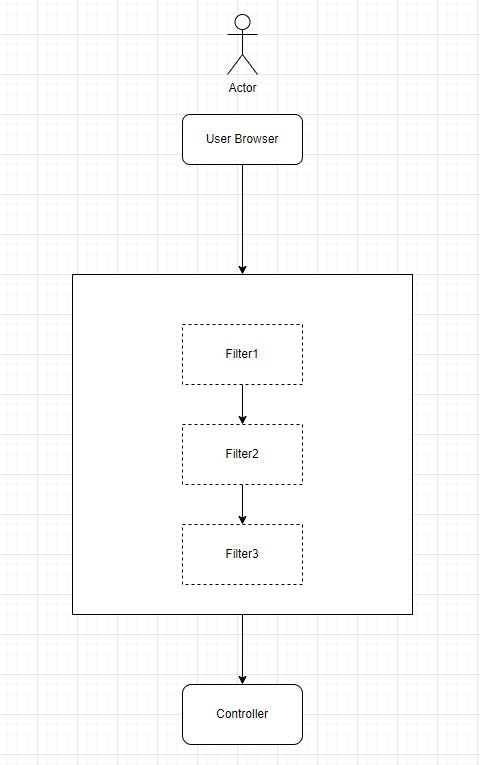

Controller -> Service -> Repository.

Service performs the business logic and the repository interacts with the database.
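To illustrate this layering in isolation, here is a small sketch with an in-memory repository standing in for the Prisma-backed one. Everything in it is an assumption for illustration: the post's UserRepository is not shown, and the real methods are async, while these are synchronous to keep the example short. The hash function is injected so the service does not depend on a particular hashing library.

```typescript
interface User {
  id: string;
  email: string;
  firstName: string;
  lastName?: string;
  password: string;
}

type NewUser = Omit<User, "id">;

// Repository layer: the only code that knows how users are stored.
interface UserRepository {
  save(user: NewUser): User;
  findByEmail(email: string): User | undefined;
}

class InMemoryUserRepository implements UserRepository {
  private readonly users = new Map<string, User>();
  private nextId = 1;

  save(user: NewUser): User {
    if (this.users.has(user.email)) {
      throw new Error(`email already registered: ${user.email}`);
    }
    const saved: User = { id: String(this.nextId++), ...user };
    this.users.set(saved.email, saved);
    return saved;
  }

  findByEmail(email: string): User | undefined {
    return this.users.get(email);
  }
}

// Service layer: business rules only; storage and hashing are injected.
class UsersService {
  private readonly repository: UserRepository;
  private readonly hash: (plain: string) => string;

  constructor(repository: UserRepository, hash: (plain: string) => string) {
    this.repository = repository;
    this.hash = hash;
  }

  createUser(input: NewUser): User {
    // The plain-text password never reaches the repository.
    return this.repository.save({ ...input, password: this.hash(input.password) });
  }

  getByEmail(email: string): User | undefined {
    return this.repository.findByEmail(email);
  }
}
```

Because the service only depends on the UserRepository interface, the in-memory version can be swapped for a database-backed one without touching the business logic, which is also convenient for unit tests.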

    import { Injectable } from '@nestjs/common';
    import { PrismaService } from 'src/common/prisma.service';
    import { CreateUserDto } from './dtos/create-user.dto';
    import * as bcrypt from 'bcryptjs';
    import { UserRepository } from './user.repository';
    import { User } from './entities/user.entity';

    @Injectable()
    export class UsersService {
      constructor(private readonly prismaService: PrismaService, private readonly userRepository: UserRepository) {}

      async createUser(user: CreateUserDto) {
        const hashedPassword = await bcrypt.hash(user.password, 12);
        const userToBeCreated = User.createNewUser({
          firstName: user.firstName,
          lastName: user.lastName,
          email: user.email,
          password: hashedPassword,
        });
        return await this.userRepository.save(userToBeCreated);
      }

      async findById(id: string) {
        return await this.userRepository.findById(id);
      }

      async getByEmail(email: string) {
        const user = await this.userRepository.findByEmail(email);
        return user;
      }
    }

As you can see above, while creating a new user, we are storing the hash of the password in the database.

And here is the other API for user signin.

    @UseGuards(LocalAuthGuard)
    @Post('/signin')
    async signIn(@Req() request: RequestWithUser) {
      const user = request.user;
      return user;
    }

I will explain this API in detail soon.

Add Passport and Authentication Strategy

We already discussed the Passport library. Add the following libraries to your backend application:

    npm install @nestjs/passport passport @types/passport-local passport-local @types/express

We will use a basic authentication mechanism for our application. Passport calls these mechanisms strategies. So, we will be using the local strategy.

In a NestJS application, we implement the local strategy by extending PassportStrategy.

    import { Injectable } from "@nestjs/common";
    import { PassportStrategy } from '@nestjs/passport';
    import { Strategy } from 'passport-local';
    import { User } from "src/users/entities/user.entity";
    import { AuthService } from "./auth.service";

    @Injectable()
    export class LocalStrategy extends PassportStrategy(Strategy) {
      constructor(private readonly authService: AuthService) {
        // passport-local reads `username` by default; use the `email` field instead.
        super({
          usernameField: 'email'
        });
      }

      async validate(email: string, password: string): Promise<User> {
        return this.authService.getAuthenticatedUser(email, password);
      }
    }

For the local strategy, Passport calls the validate method with email and password as parameters. Eventually, we will also set up an authentication guard.
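The flow described above can be pictured with a simplified model. This is not Passport's actual implementation, only a sketch of the idea under stated assumptions: the function authenticateLocal, the LoginRequest type and the synchronous verify callback are hypothetical, and failure is signalled by a boolean, whereas the validate method in this post throws an exception instead.

```typescript
// Hypothetical, minimal request shape for this sketch.
interface LoginRequest {
  body: Record<string, unknown>;
  user?: unknown;
}

// Stand-in for the validate method: returns the user, or null on failure.
type Verify<U> = (username: string, password: string) => U | null;

// Simplified model of what a local strategy does for one request.
function authenticateLocal<U>(
  request: LoginRequest,
  verify: Verify<U>,
  usernameField = "username",
): boolean {
  const username = request.body[usernameField];
  const password = request.body["password"];
  if (typeof username !== "string" || typeof password !== "string") {
    return false; // credentials missing from the request body
  }
  const user = verify(username, password);
  if (user === null) {
    return false; // verify rejected the credentials
  }
  request.user = user; // later handlers read the user from the request
  return true;
}
```

This is why the strategy is configured with usernameField set to email: the credentials are looked up in the request body by field name, and on success the verified user ends up on the request object, where the controller can read it.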

The validate method uses authService to get the authenticated user. The service looks like this:

    import { HttpException, HttpStatus, Injectable } from '@nestjs/common';
    import * as bcrypt from 'bcryptjs';
    import { User } from 'src/users/entities/user.entity';
    import { UsersService } from 'src/users/users.service';

    @Injectable()
    export class AuthService {
      constructor(private readonly userService: UsersService) {}

      // Looks up the user by email and checks the password against the stored hash.
      async getAuthenticatedUser(email: string, password: string): Promise<User> {
        try {
          const user = await this.userService.getByEmail(email);
          await this.validatePassword(password, user.password);
          return user;
        } catch (e) {
          // An unknown email and a wrong password produce the same error, so
          // the response does not reveal which emails are registered.
          throw new HttpException('Invalid Credentials', HttpStatus.BAD_REQUEST);
        }
      }

      // Throws if the plain-text password does not match the bcrypt hash.
      async validatePassword(password: string, hashedPassword: string) {
        const passwordMatched = await bcrypt.compare(password, hashedPassword);
        if (!passwordMatched) {
          throw new HttpException('Invalid Credentials', HttpStatus.BAD_REQUEST);
        }
      }
    }

The @nestjs/passport package provides a built-in guard, AuthGuard. Depending on the strategy you are using, you can extend AuthGuard as below:

    import { Injectable } from "@nestjs/common";
    import { AuthGuard } from "@nestjs/passport";

    @Injectable()
    export class LocalAuthGuard extends AuthGuard('local') {
    }

Now in our controller, we will use this guard for authentication purposes. Make sure you have set up your Authentication Module to provide LocalStrategy.

    import { Module } from '@nestjs/common';
    import { AuthService } from './auth.service';
    import { AuthController } from './auth.controller';
    import { UsersModule } from 'src/users/users.module';
    import { PrismaModule } from 'src/common/prisma.module';
    import { LocalStrategy } from './local.strategy';
    import { PassportModule } from '@nestjs/passport';

    @Module({
      imports: [UsersModule, PrismaModule, PassportModule],
      controllers: [AuthController],
      providers: [AuthService, LocalStrategy]
    })
    export class AuthModule {}

In our User Controller, we have added @UseGuards(LocalAuthGuard).
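One detail worth considering: the sign-in handler shown earlier returns request.user as-is, and that object appears to be the stored user record, including the password hash. A small mapping step can drop the hash before the response is sent. The sketch below is an assumption for illustration; UserRecord mirrors the columns of the Prisma model, and toPublicUser is a hypothetical helper name.

```typescript
// Assumed record shape, mirroring the columns of the Prisma User model.
interface UserRecord {
  id: string;
  email: string;
  first_name: string;
  last_name: string | null;
  password: string; // bcrypt hash
}

type PublicUser = Omit<UserRecord, "password">;

// Returns a copy of the user without the password hash.
function toPublicUser(user: UserRecord): PublicUser {
  const { password: _password, ...publicUser } = user;
  return publicUser;
}
```

The handler could then return toPublicUser(request.user) instead of the raw record, so the hash stays on the server.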

Demonstration of Basic Authentication with Passport

We have covered the front end and backend. Let’s take a look at the actual demo of this application now.

Go to the frontend directory and start the React app:

    npm start

It runs on port 3000 by default, but I have set it to use port 3001 through the start script in package.json (Windows syntax):

    "start": "set PORT=3001 && react-scripts start"

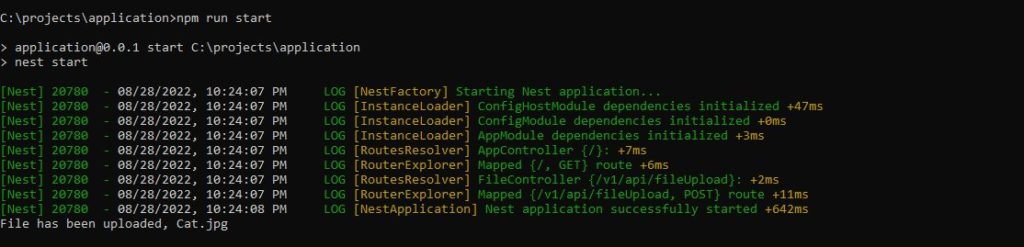

Also start the backend NestJS app:

    npm run start

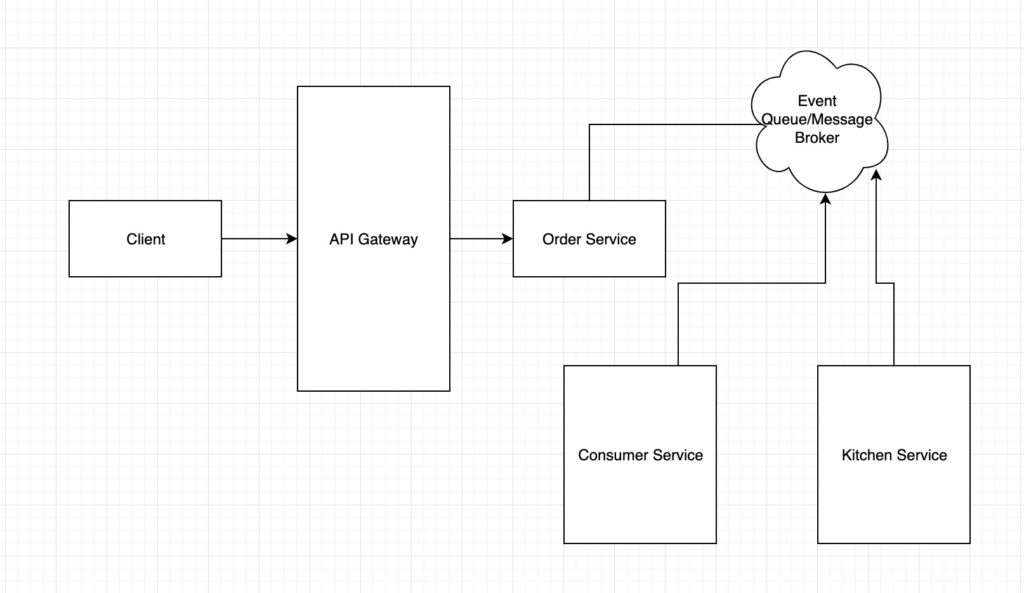

This will run on port 3000 by default, and I have not changed that. The frontend will call backend APIs on port 3000. Of course, in real-world applications, you will have some middleware like a load balancer or gateway to route your API calls.

Once the applications are running, access the demo app at http://localhost:3001 and it should show the login screen.

If you don’t have a user, create one with the sign-up option. Once the user signs in with valid credentials, they will see the home page.

That’s all. The code for this demo is available here.

Conclusion

In this post, I showed the details of the Passport library and how you can use it for basic authentication in a NestJS and React application.