In this post, I demonstrate how to use the event-emitter technique in a NestJS application. This particular technique is part of the NestJS framework, but it also serves as a practical introduction to the fundamentals of event-driven architecture and event sourcing in microservices.

Introduction

Event-based architecture helps in building scalable applications. The major advantage of this pattern is that every event has a source and a destination, aka a publisher and a subscriber, and the two are decoupled from each other. The subscriber processes the events asynchronously. Frameworks like NestJS provide techniques for emitting events without much extra overhead. Let’s dive into this event-emitter technique.

Event Emitter Technique

The NestJS documentation says: “Event Emitter package (@nestjs/event-emitter) provides a simple observer implementation, allowing you to subscribe and listen for various events that occur in your application.”

This technique lets you publish an event from one part of your service or application and have another part use that event for further processing. In this post, I will demonstrate the following flow:

- client uploads a file

- an event is emitted for a file uploaded

- another service processes that file based on that event.
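The three steps above can be sketched in a few lines. This is a minimal, framework-free sketch: Node's built-in `EventEmitter` stands in for the `EventEmitter2` instance that NestJS injects, and the event name `file.uploaded`, the `FileUploaded` type, and the `uploadFile` function are illustrative assumptions, not code from the demo further down.

```typescript
import { EventEmitter } from "node:events";

// Hypothetical payload type, used only in this sketch.
interface FileUploaded {
  fileName: string;
  sizeInBytes: number;
}

const emitter = new EventEmitter();
const processedFiles: string[] = [];

// Step 3: another part of the application reacts to the event.
emitter.on("file.uploaded", (event: FileUploaded) => {
  processedFiles.push(event.fileName);
});

// Steps 1 and 2: a client "uploads" a file, and an event is emitted for it.
function uploadFile(fileName: string, content: string): void {
  const event: FileUploaded = {
    fileName,
    sizeInBytes: Buffer.byteLength(content),
  };
  emitter.emit("file.uploaded", event);
}

uploadFile("report.csv", "id,name\n1,Ada\n");
console.log(processedFiles); // [ 'report.csv' ]
```

The uploader never calls the processing code directly. It only knows the event name, which is what keeps the two parts decoupled.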

Now the question is when to use this technique and whether it has any drawbacks. Let’s look into that further.

When To Use

If your application needs to do some CPU-heavy or data-intensive work, you can consider doing that work asynchronously, outside the request flow. But how do we start this asynchronous work? That’s where the event emitter comes into the picture.

One part of your application will emit an event and another part will listen to that event. The listener will process that event and perform the downstream work.
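One detail is easier to see in code: the emitting side does not wait for the awaited work inside an `async` listener. The following is a minimal sketch under stated assumptions: Node's built-in `EventEmitter` stands in for `EventEmitter2`, and the event name `report.requested`, the `requestReport` function, and the 10 ms timer that simulates slow work are all hypothetical.

```typescript
import { EventEmitter } from "node:events";

const emitter = new EventEmitter();
const order: string[] = [];

// The listener starts immediately, then yields at its first await.
emitter.on("report.requested", async (reportId: number) => {
  order.push(`listener started ${reportId}`);
  // Simulated slow, I/O-style work.
  await new Promise<void>((resolve) => setTimeout(resolve, 10));
  order.push(`listener finished ${reportId}`);
});

// The emitting side returns without waiting for the listener to finish.
function requestReport(reportId: number): string {
  emitter.emit("report.requested", reportId);
  order.push("emitter returned");
  return `report ${reportId} accepted`;
}

const response = requestReport(7);
console.log(response); // report 7 accepted
console.log(order);    // [ 'listener started 7', 'emitter returned' ]
```

The listener still runs in the same Node.js process as the emitter. Work that awaits I/O benefits from this hand-off, but a listener that does long synchronous CPU work would still block the event loop.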

To understand this better, let’s look at an example.

Demo

Controller –

We have a simple REST controller to upload a file.

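```typescript
import { Controller, Post, UploadedFile, UseInterceptors } from '@nestjs/common';
import { FileInterceptor } from '@nestjs/platform-express';
// The import path below is an assumption; adjust it to your project layout.
import { FileUploadService } from './file-upload.service';

@Controller('/v1/api/fileUpload')
export class FileController {
  constructor(private fileUploadService: FileUploadService) {}

  @Post()
  @UseInterceptors(FileInterceptor('file'))
  async uploadFile(@UploadedFile() file: Express.Multer.File): Promise<void> {
    // Delegate the upload to the service, which also emits the event.
    const uploadedFile = await this.fileUploadService.uploadFile(file.buffer, file.originalname);
    console.log('File has been uploaded,', uploadedFile.fileName);
  }
}
```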

This controller uses another NestJS technique, the interceptor, which we have seen in a previous post.

Register the Event Emitter Module –

To use the event emitter, we first register its module in the imports array of our application module. It looks like this:

```typescript
// inside @Module({ ... }) of the application module
imports: [EventEmitterModule.forRoot()],
```

Service –

Our controller uses a FileUploadService to upload the file to an AWS S3 bucket. This service also emits the event after the file has been uploaded.

```typescript
import { Injectable } from '@nestjs/common';
import { ConfigService } from '@nestjs/config';
import { EventEmitter2 } from '@nestjs/event-emitter';
import { S3 } from 'aws-sdk';
import { v4 as uuid } from 'uuid';
// PrismaService, FileUploadedEvent, and EventStatus are project-specific;
// their import paths are omitted here.

@Injectable()
export class FileUploadService {
  constructor(
    private prismaService: PrismaService,
    private readonly configService: ConfigService,
    private eventEmitter: EventEmitter2,
  ) {}

  // The return type is inferred from the Prisma create() call below.
  async uploadFile(dataBuffer: Buffer, fileName: string) {
    // 1. Upload the file to S3 under a unique key.
    const s3 = new S3();
    const uploadResult = await s3
      .upload({
        Bucket: this.configService.get('AWS_BUCKET_NAME'),
        Body: dataBuffer,
        Key: `${uuid()}-${fileName}`,
      })
      .promise();

    // 2. Store the file details in the database.
    const fileStorageInDB = {
      fileName: fileName,
      fileUrl: uploadResult.Location,
      key: uploadResult.Key,
    };
    const filestored = await this.prismaService.fileEntity.create({
      data: fileStorageInDB,
    });

    // 3. Build the event payload and store it as a pending internal event.
    const fileUploadedEvent = new FileUploadedEvent();
    fileUploadedEvent.fileEntityId = filestored.id;
    fileUploadedEvent.fileName = filestored.fileName;
    fileUploadedEvent.fileUrl = filestored.fileUrl;

    const internalEventData = {
      eventName: 'user.fileUploaded',
      eventStatus: EventStatus.PENDING,
      eventPayload: JSON.stringify(fileUploadedEvent),
    };
    const internalEventCreated = await this.prismaService.internalEvent.create({
      data: internalEventData,
    });

    // 4. Emit the event with the stored event's id so the listener can update it.
    if (internalEventCreated) {
      fileUploadedEvent.id = internalEventCreated.id;
      console.log('Publishing an internal event');
      const emitted = this.eventEmitter.emit('user.fileUploaded', fileUploadedEvent);
      if (emitted) {
        console.log('Event emitted');
      }
    }

    return filestored;
  }
}
```

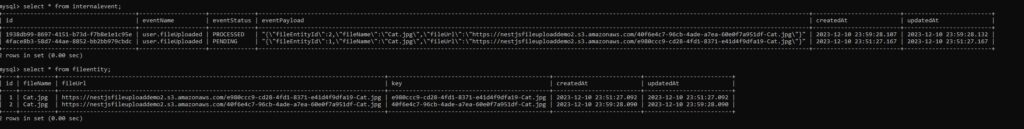

This service code does a few things:

- Uploads the file to AWS S3

- Stores the file details in the database

- Stores the internal event user.fileUploaded

- Emits that event

One reason we store the event in the database is to know exactly when the event was emitted. And if we ever want to reprocess the same event, we can read it back and emit it again.
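Reprocessing stored events could look roughly like the sketch below. It is not part of the demo application: an in-memory array stands in for the internal event table, Node's built-in `EventEmitter` stands in for `EventEmitter2`, and the `StoredEvent` shape and the `replayPendingEvents` function are hypothetical names chosen for illustration.

```typescript
import { EventEmitter } from "node:events";

// Hypothetical shape of a stored event row.
type StoredStatus = "PENDING" | "PROCESSED";
interface StoredEvent {
  id: string;
  eventName: string;
  eventStatus: StoredStatus;
  eventPayload: string; // JSON-serialized payload
}

// In-memory stand-in for the internal event table.
const eventTable: StoredEvent[] = [
  { id: "evt-1", eventName: "user.fileUploaded", eventStatus: "PROCESSED",
    eventPayload: JSON.stringify({ fileName: "a.txt" }) },
  { id: "evt-2", eventName: "user.fileUploaded", eventStatus: "PENDING",
    eventPayload: JSON.stringify({ fileName: "b.txt" }) },
];

const emitter = new EventEmitter();
const handled: string[] = [];

// The listener marks the stored event as processed once it has handled it.
emitter.on("user.fileUploaded", (event: { id: string; fileName: string }) => {
  handled.push(event.fileName);
  const row = eventTable.find((stored) => stored.id === event.id);
  if (row) row.eventStatus = "PROCESSED";
});

// Re-emit every event that is still pending; return how many were replayed.
function replayPendingEvents(): number {
  const pending = eventTable.filter((stored) => stored.eventStatus === "PENDING");
  for (const stored of pending) {
    const payload = JSON.parse(stored.eventPayload);
    emitter.emit(stored.eventName, { ...payload, id: stored.id });
  }
  return pending.length;
}

const replayed = replayPendingEvents();
console.log(replayed, handled); // 1 [ 'b.txt' ]
```

Because the listener flips the status to processed, running the replay a second time finds nothing left to emit.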

The service receives an EventEmitter2 instance through its constructor. To be able to use this class, we need to install the @nestjs/event-emitter dependency in our Nest project, for example with npm install @nestjs/event-emitter.

Event Listener –

We have a service that is emitting the event, but we will also need a listener service that will process the emitted event. So let’s write our listener service.

Our event class looks like this:

```typescript
export class FileUploadedEvent {
  id: string;
  fileName: string;
  fileUrl: string;
  fileEntityId: number;
}
```

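And our listener class looks like this:

```typescript
import { Injectable } from '@nestjs/common';
import { OnEvent } from '@nestjs/event-emitter';
// PrismaService, FileUploadedEvent, and EventStatus are project-specific;
// their import paths are omitted here.

@Injectable()
export class FileUploadedListener {
  constructor(private prismaService: PrismaService) {}

  @OnEvent('user.fileUploaded')
  async handleFileUploadedEvent(event: FileUploadedEvent) {
    console.log('File has been uploaded');
    console.log(event);

    // Mark the stored internal event as processed.
    await this.prismaService.internalEvent.update({
      where: {
        id: event.id,
      },
      data: {
        eventStatus: EventStatus.PROCESSED,
      },
    });

    console.log('File will get processed');
  }
}
```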

To listen to emitted events, we use the @OnEvent decorator. The listener class also has to be registered as a provider in a module so that NestJS picks it up. Just like this listener, we can add more listeners for the same event, and each listener can do its own set of work.
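Here is a minimal sketch of several listeners sharing one event. It uses Node's built-in `EventEmitter` as a stand-in for `EventEmitter2`, and the listener bodies and the `user.fileDeleted` event name are assumptions for illustration. It also shows the boolean that `emit` returns, which is the kind of result the `if (emitted)` check in the service relies on.

```typescript
import { EventEmitter } from "node:events";

const emitter = new EventEmitter();
const log: string[] = [];

// Two independent listeners for the same event; neither knows about the other.
emitter.on("user.fileUploaded", (fileName: string) => {
  log.push(`scan ${fileName}`);
});
emitter.on("user.fileUploaded", (fileName: string) => {
  log.push(`thumbnail ${fileName}`);
});

// emit() reports whether at least one listener was registered for the event.
const hadListeners = emitter.emit("user.fileUploaded", "photo.png");
const noListeners = emitter.emit("user.fileDeleted", "photo.png");

console.log(log);          // [ 'scan photo.png', 'thumbnail photo.png' ]
console.log(hadListeners); // true
console.log(noListeners);  // false
```

With Node's emitter, listeners run in the order they were registered. A `true` result only says that a listener existed, not that it finished its work successfully.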

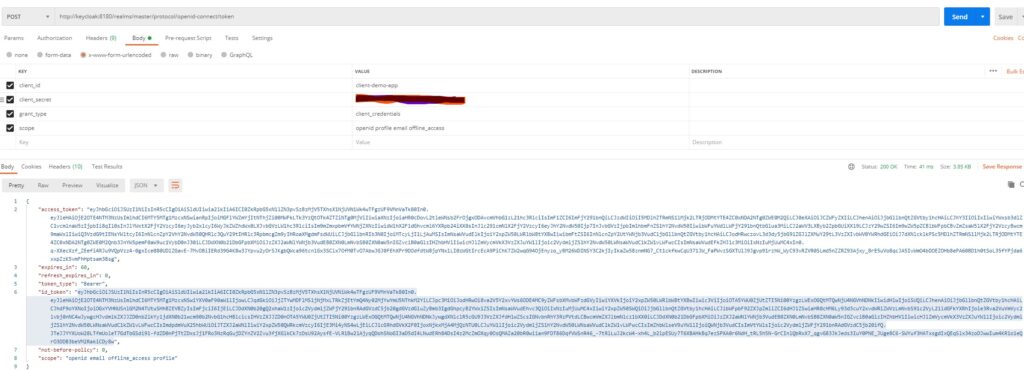

Now, if we run our application and upload a file, an event will be emitted and handled by the listener. We can see the database entries for the event.

Conclusion

In this post, I showed how to use the Event Emitter technique in a NestJS Application.